What is a Large Language Model?

Part 1: Stochastic generation of cultural artifacts, from Aristotle to the Eighties

When you “converse” with one of today’s generative AI models, behemoths like Claude 4, ChatGPT 4, or Gemini, you’re interacting with a powerful computer program that has one and only one intention: to accurately predict what word (technically what “token”) you might expect to see next. And then to predict the next word after that, and the next, and thus generate something you perceive as “meaning.”

That’s it.

Well, that’s not really it. These programs can do quite a bit more, including things like sentiment analysis (judging the emotions present in a piece of text), entity extraction (finding structured data like names and addresses), summary and synthesis, reasoning and problem-solving. Though in essence all they are doing is generating one next word after another, this is a bit like saying that an orchestra is simply playing one note after another.

But we might start by asking — how does it even do that simple thing? The next-word prediction thing? Even if that’s a paltry way to describe the behavior of these behemoths.

To describe how it happens today — that takes some explaining. But in this post, I can tell you how it sort of got started — back in the day.

1. 1974

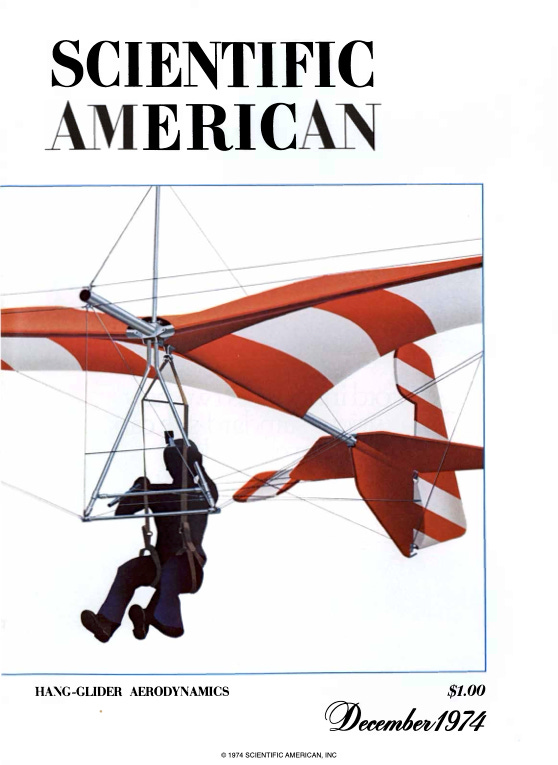

The idea of the algorithmic generation of things that look like human cultural artifacts goes back a long way.1 I know exactly when I personally became aware of the idea: December 1974, when my nine-year-old self, perusing my beloved Scientific American (that my parents had sent to the house!), came across a“Mathematical Games” column2 by Martin Gardner that detailed the history of attempts to generate music by algorithmic means.

I’d never laid eyes on a computer (that would happen a couple of years later when Radio Shack’s TRS-80 hit the classroom), but as a piano-playing youngster with science and math leanings, I was somehow held rapt by the idea that human-like cultural artifacts could result from mathematical generation. Gardner’s span of reference was wide, encompassing Borges, Ramón Lull, the music theorist Kirnberger, Fourier series, and a wide range of books and articles on the generation and playing of music by digital computers. (15 years ago this might have amounted to a few hours of Internet research; today an LLM could pull most of the references together in a minute or two; having been a researcher myself before the dawn of the Internet, I can appreciate the weeks of effort wrapped into Gardner’s rich four-page essay). In passing, Gardner touched on ideas that seem trivial now but were more edgy then — the idea that any existing cultural artifact, like a painting or symphony, could be digitized, and so in theory become the product of generation by algorithm.3 This stuck in my mind and I could not get it out.

2. 1983-84

Nine years later computers had hit the classroom and the home — we had a lab full of Commodore PETs and TRS-80s, and one of my friends owned an Apple II (the main use of which was playing Apple Panic). My parents still got Scientific American, and in November 1983, a “Computer Recreations” column by Brian Hayes promised “a progress report on the fine art of turning literature into drivel.”4

Hayes was reporting on a class of programs called “travesty generators,” that take some body of text (say the works of Shakespeare) as their input, and output a nonsense text that nonetheless “sometimes has a haunting familiarity.” Critically for later machine learning, it does this by mimicking some of the statistical properties of the text. Hayes’ reflections are worth quoting fully:

“I cannot imagine a cruder method of imitation. Nowhere in the program is there even a representation of the concept of a word, much less any hint of what words might mean. There is no representation of any linguistic structure more elaborate than a sequence of letters. The text created is the clumsiest kind of pastiche, which preserves only the most superficial qualities of the original. What is remarkable is that the product of this simple exercise sometimes has a haunting familiarity. It is nonsense, but not undifferentiated nonsense; rather it is Chaucerian or Shakespearian or Jamesian nonsense. Indeed, with all semantic content eliminated, stylistic mannerisms become the more conspicuous. It makes one wonder: Just how close to the surface are the qualities that define an author's style?”

How close indeed? The answer, it turns out, would come soon enough, in the first quarter of the 21st century, when neural-network programs came into their own. Nonetheless, what Hayes found remarkable was an emergent property of the statistical characteristics of the source text — even a very simple computer program, it turns out, could sound Shakespearian, even if could not mean Shakespearian.

Not yet.

3. A detour into older history

Like Gardner, Hayes alluded to the centuries-old history of human speculation on algorithmic generation; as long ago as 1690, John Tillotson, Archbishop of Canterbury, wondered aloud how often one would have to scatter a bag of random letters before they created (wrote?) a poem. And of course the army-of-monkeys theory of random text generation (sometimes cited as “700 monkeys would eventually write Shakespeare”, while Sir Arthur Eddington speculated in 1927 that they might write “all the books in the British Museum.” This all leads to musings on the nature of meaning — if a computer program produces a random text that happens to correspond to Don Quixote word for word, did it write it? Is it the same work? Is it a “work” at all?

Jorge Luis Borges, whose fiction anticipated many of these questions, ponders similar matters in one of my favorite of his stories, “Pierre Ménard, author of the Quixote.” The fictional Ménard is a minor French literary and intellectual figure of the 20th century, who has conceived of the ambition of writing Don Quixote; not transcribing it, not copying, but going through an arduous process of conception, writing and revision, whose final product would not copy the Quixote, but coincide with it; somehow to arrive, by independent means and his own generative process, at exactly the same sequence of letters as the Spanish Cervantes set down three centuries prior. In his lifetime, according to the story, he managed this feat for two chapters of the Quixote and a fragment of a third. Borges invites us to consider what a “literary work” really consists of. Is it merely the sequence of letters on a page? In which case a machine-produced work that coincides with some or all of the Quixote is just as much “literature” as what Cervantes wrote. Does it include the author and her/his generative process? In which case, Ménard’s text, though character-for-character identical to Cervantes’, is nonetheless original and unique. “Cervantes’s text and Menard’s are verbally identical,” writes Borges, “but the second is almost infinitely richer.”

In his story “The Library of Babel,” Borges posited the existence of a library containing every book that could be written in 410 pages of 40 lines of 80 characters each, using an alphabet of 25 characters. Most of the books are naturally gibberish, but included among them must also be an infinite number of highly intelligible (i.e. meaningful) texts, including, presumably, the first 410 pages of the Quixote itself, or something arbitrarily close to it.

In fact, the likelihood of a truly random process producing even a single intelligible sentence is absurdly small — trillions of universes of monkeys typing for trillions of times the life of the universe would still have only an infinitesimal chance of typing even a portion of Hamlet.

All of this thinking circled again and again around the question: if an algorithmically-generated sequence of letters happens to correspond to something meaningful in human language, is it really a “sentence?” Does it mean? Does it have an author?

LLMs bring all of those questions to the forefront once more.

(Interestingly (or maybe inevitably?), sandwiched in the Hayes article was an Electronic Arts ad for their new Pinball Construction Set game, provocatively titled “Bill Budge wants to write a program so human that turning it off would be an act of murder.” “A software friend,” the ad went on. “It sinks in slowly … The idea is probably ten years away from actuality.” I had never heard of this game but apparently it was revolutionary in its time.)

4. A real travesty

Haye’s article, as well as a 1984 followup article in Byte by Hugh Kenner and Joseph O’Rourke5, was all about the art of programming a “travesty generator” — a computer program, as noted, that ingests a source text, and produces a simulated output text that mimics certain statistical patterns in the original. The result, as Hayes noted, was nonsense, but nonsense that is somehow Shakespearean.

How do they work? The answer gives some insight into how later generations of such programs, like today’s LLMs, approach things — in fact, travesty programs are not infrequently cited as early precursors of later natural language processing techniques.

When we say a synthetic text is randomly generated, that’s misleading. These generated texts are patterned on human-written text that is emphatically not random in their characteristics. As an example, consider the sequence of characters elephan_. What letters (in English) could occupy the blank spot? Only the letter t. (From an information-theory perspective, that means that the letter t in that position adds no information to what we already knew. Its information content is effectively zero, a fact elucidated by Claude Shannon in his studies of information entropy, as well as a fact that underlies why lossless compression programs like zip exist, and why they’re so effective on human texts — a topic which could be an entire post of its own). In any case, if we know something about English, we’ll know that the letters elephan will necessarily be followed by t.

Let’s consider a source text, like the complete works of Shakespeare. Consider it as a series of words rather than letters. What’s a word we tend to find in Shakespeare? How about thou. Now write a computer program that scans the complete works of Shakespeare and finds every instance of the word thou — as well at the word that follows it. What we’ll discover is that out of the 20,000 or so unique words in Shakespeare, only a subset of them can follow the word thou, and of those, only a handful are prevalent — the most common, of course, being art, followed by hast, shalt, and wilt.

These word-pairs — thou hast, thou shalt, thou wilt — are called “2-grams” in the parlance: units of two words. In fact thou-constructions are so common in Shakespeare that there are over 1000 unique 2-grams starting with thou, but the vast majority of these appear only once, with a very small handful like thou hast, thou shalt, and thou wilt accounting for about a quarter of all occurrences.

Here’s a sample of what part of that dictionary might look like:

Now imagine you compile a comprehensive list of 2-grams in Shakespeare. This requires two steps:

1. Find every unique word in Shakespeare (this process, called tokenization, has some wrinkles we’ll skip over, like whether capitalized and uncapitalized versions of a word count as the same or different words, and whether punctuation counts as a word)

2. For each unique word, compile a frequency table of all following words, according to the text, as well as the frequency with which each following word (the second part of the 2-gram) appears. The table will look the one above, only likely longer.

Once you have this data set, called an n-gram dictionary, you can begin to generate Shakespeare randomly — though since the distributions are anything but random, a better word might be stochastically — referring to any process that incorporates chance in its outcome. The algorithm is as follows:

1. Pick a starting word — either choose at random from the dictionary, or pick only from capitalized words.

2. Once you have this word, consult the dictionary to see which words can possibly follow it, according to the distributions in the original source.

3. Pick a word from the possible following words by probability generation. Think of this as a many-sided dice roll that takes into account the probability distribution of following words — so if the first word is thou, there’s a 1 in 4 chance or so that the next word selected will be art, shalt, wilt, dost, hast, or canst — and there is also a chance, albeit a much smaller one, that it’ll be infamonize, bloodier, or possessed (according to the dictionaries I generated with my own travesty program, about which more in another newsletter.)

4. Move to the word you just selected, take it as the base of the next 2-gram, return to step 1 and repeat for as many words as you care to generate.

A Shakespeare travesty generated at the level of 2-grams (more on what I mean by that in a minute) is, well, a travesty, yet still somehow Shakespearean:

Shall anything thou me, good youth, ... Askance their virtue that virtue ... Follower of Diana

Here, here. ... _ Aside. [ _ He wants no breach on’t,

Into his kingdom

(Duke himself has deceiv’d, you are by famine, fair son of outlawry

Find little. Support him hold. By him be new stamp, ho. _ Anglish. You must, henceforward all unwarily

Stand, in their conquered woe doth fall. Is not out

Cheater, Caius Ligarius, that shallow,A bit like one imagines listening to Shakespeare themself6 muttering in their sleep.

A similar effort on the combined works of Dickens, again based on 2-grams:

Whether Mrs Chick,'If you are threefold:

As Mr. Bridgman having refreshed herself to be!'... Labour; with two tea, in torment, ... Suffocated!--Mr. Two before Lent; but I do justice on the rest, to be heartier. ... OBSERVE

Mr. Wickfield seemed to know whether it.'continued Mr. Winkle. Charles 's countenance by the Court of this, glasses before we

onions, Monsieur the Worshipful the little

hat to sneak'Is she drew a beauty; even know

glass. It from AgnesEqually nonsensical, yet clearly more Dickensian than Shakespearean.

The math and statistics being used here are very, very simple — brute force word counts and probabilities, looking at no linguistic structure larger than a word pair. Yet already there is some coherence and sense of character. So, we can deduce an apparent principle even from this simple analysis:

Principle 1: Something about the style and flavor of a text can be captured even by simple statistical analysis of the kind embodied in 40-year-old computer programs.

Can we increase the complexity of our statistical analysis and get better results? Yes, we can. The answer is to increase the size of our n-grams.

Suppose that instead of looking at words in pairs, we look at them in triples, and base each word on the two words that precede it. So instead of looking just at thou and deciding the next word, we’d look at thou art instead. We now need a dictionary with entries not for each unique word in Shakespeare, but each unique word pair. Then for each word pair, we’ll have a list of all the words that can follow that pair, again with respect just to Shakespeare’s corpus.

This leads to a bigger dictionary — over 330,000 entries, instead of just 20,000 for single words. At the same time, the number of possible following words shrinks — so the word pair thou art has only 180 unique following words in Shakespeare, with the most common combinations being thou art a, thou art not, and thou art so.

Once we’ve built this new 3-gram dictionary, generating the travesty text is a similar endeavor — pick an existing two-word pair from the dictionary to start, then generate the following word probabilistically based on the possible following words in the dictionary. Suppose we decide the next word will be so. Then the travesty opens thou art so. Then we consider the word pair art so, look at what words might follow that word (near, far, fat, full and fond are all candidates), and repeat until we have as much travesty as we want.

Here is a third-order Shakespeare travesty:

Now is it? How seems he to give them seals never, never kept seat in one woman, I must embrace the fate

And that same dew, which drives

O’er lawyers ’ fingers, who seem no less flowing

Than stand uncovered to the Centaur, fetch my gold again. _ ] Hie thee again. ... LORD GREY, son of Marcus Cato, Lucilius! BASSANIO. I pray you, master! Grave sir, but I marked him

sixpence a day in Germany. FALSTAFF. ... Rite? I PandulphStill nonsense, but slightly more coherent somehow.

This increase in order can be repeated at levels of 4, 5, 6, 7 or whatever we choose. In practice, any order of 6 or greater starts to simply repeat large chunks of the source, since even in a corpus as large as Shakespeare’s, any given sequence of six or seven words is likely to be unique and have only one possible following word. Here is “Shakespeare” at orders 4-6:

Shakespeare order 4:

This new - married wife about her husband ’s occasion, let her live,

Where every something being blent together,

And that tomorrow you will bring the device to the bar, crying all “ Guilty, ” Cardinal,

With charitable bill(O bill, sore shaming

Those rich - left heirs that let their fathers lie

Without a monument!) bring thee all this;

And, at that hour, my lord, ’ tis a cause that hath no music in three parts. PROTEUS. SirShakespeare order 5:

The learned and authentic fellows, —

PAROLLES. _ Mor du vinager! _ is not this Helen? LAFEW. O, train me not, sweet mermaid, with thy note

To drown me in thy sister ’s flood of tears. Come home with me to dinner. Besides

your cheer, you shall have sport: I will show you shining at this feast,

Having fully dined before. Enter Titus Lartius with his power,

To fight against that guilty homicide. HERBERT. IShakespeare order 6:

Ballad us out o ’ tune. The quick comedians

Extemporally will stage us and present

Our Alexandrian revels; Antony

Shall be brought drunken forth, and I shall see

Some squeaking Cleopatra boy my greatness

I ’ th ’ absence of the needer. CORIOLANUS. [ _ Removing his muffler _. ] If, Tullus,

Not yet thou know’st me, and, seeing me, dost not

Think me for the man I am, necessity

Commands me name myself. AUFIDIUS.At order 6, the first five and half lines quote verbatim a speech of Cleopatra, then pivot from her I’ th’ posture of a whore to I’ th’ absence of the needer, from a speech by Cominius, from Coriolanus. At this point the algorithm mostly reproduces the original in chunks. In order for a level-6 travesty to behave otherwise, Shakespeare’s corpus would need to be much, much larger, so that a given six-word sequence occurred multiple times, with multiple possible following words. Dicken’s corpus is about 2.5 times bigger than Shakespeare, but doubling isn’t nearly enough — this sixth-order travesty of Dickens is mostly a long quotation from A Tale of Two Cities:

Shivered at every corner, passed in and out at every doorway, looked from every window, fluttered in every vestige of a garment that the wind shook. The mill which had worked them down, was the mill that grinds young people old; the children had ancient faces and grave voices; and upon them, and upon nothing else. But, astounded as he was by the apparition of the dwarf among the Little Bethelites, and not free from a misgiving that it was the lady - lodger, and noThis leads to another principle or finding:

Principle 2: to create more authentic simulacra of human texts, bigger inputs are needed — you need to run higher-level statistical analysis on larger, indeed much larger, bodies of text.

These two principles — that human style (at least) can be captured via statistical analysis, and that the quality thereof improves with more processing power, more data, and deeper analysis, were evident over 40 years ago. They underpin, spiritually if not directly, modern natural language processing via neural networks, which is at the heart of today’s LLMs.

More in part 2, but in between, I’ll describe what it was like (during the course of a single plane flight) to resurrect the travesty programs I wrote in the 90s using one of today’s AI coding agents — a post that could be, and maybe will be, titled simply Oh Shit.

Disclosures: I try not to let AI do my writing for me. I had Claude look this piece over once I was done with it — Claude, like a good editor, recommended a slightly more explicit look-ahead at the end, and urged me to say a bit more about Borges’ “Pierre Ménard,” both suggestions I was happy to take up.

See Borges’ essay “The Total Library,” anthologized in Selected Non-fictions (1999). Edited by Eliot Weinberger. New York: Viking

Martin Gardner, “Mathematical Games,” Scientific American, December 1974, pp. 132-136.

Brian Hayes, “Computer Recreations,” Scientific American, November 1983, pp. 18-28.

Hugh Kenner and Joseph O’Rourke, “A travesty generator for micros,” Byte, November 1984, pp. 129-131, 449-469

Current scholarship slants away from debating Shakespeare’s biological gender to arguing for their essential queerness.

Fascinating, all stuff completely unknown to me. Love the personal memories, the images from old magazines, etc. The magazines especially now seem so . . . bygone.

Three points:

1. In reaction to "As an example, consider the sequence of characters elephan_. What letters (in English) could occupy the blank spot? Only the letter t. From an information-theory perspective, that means that the letter t in that position adds no information to what we already knew. Its information content is effectively zero . . ."

Is that necessarily true? Think about some letters that might be used. From elephanx, one might infer that we were looking an effort to talk about this species in a non-binary way. Elephanz might imply something in a hip-hop direction. Elephanh looks vaguely archaizing, elephanj exoticizing in a Persian direction--both, along with others, possibly evoking a fantasy-novelesque usage. (Remember Tolkien's "oliphaunt.") And so on.

Might some suggestion arise here of the difference between word-production, and word-play--with some of what that implies (please forgive me, or at least bear with me) of anti-capitalist, anti-modernist critique?

2. Speaking of word-play, I was struck by how much better I liked 2-gram Shakespeare than 2+n-gram Shakespeare. The first gave me such pleasure, I laughed out loud with delight. If a parodist (human or LLM) had written it, I would have thought, "this is absolutely brilliant, completely spot-on." (The random mention of "Diana" may be key: when writing Shakespeare, if in doubt, stick in a random classical deity.) But 3, 4, and 5 gram seemed . . . dreary? From them no pleasure did I take, anyway. 6-gram wasn't much better, but it did inspire me to think, "I need to read more Shakespeare!"

3. Do LLMs engage in play, word-play, or parody? From one who engages with these systems a lot, I get the sense they do, or can. I have never conversed with one. The idea makes me nervous.